Humans of the Data Sphere Issue #6 January 14th 2025

Your biweekly dose of insights, observations, commentary and opinions from interesting people from the world of databases, AI, streaming, distributed systems and the data engineering/analytics space.

Welcome to Humans of the Data Sphere issue #6!

First, is this our future of AI agents?

Quotable Humans

Marc Brooker on why snapshot isolation is a sweet spot: It’s a crucial difference because of one of the cool and powerful things that SQL databases make easy: SELECTs. You can grow a transaction’s write set with UPDATE and INSERT and friends, but most OLTP applications don’t tend to. You can grow a transaction’s read set with any SELECT, and many applications do that. If you don’t believe me, go look at the ratio between predicate (i.e. not exact PK equality) SELECTs in your code base versus predicate UPDATEs and INSERTs. If the ratios are even close, you’re a little unusual. …This is where we enter a world of trade-offs: avoiding SI’s write skew requires the database to abort (or, sometimes, just block) transactions based on what they read.

Charlie Marsh: It was really valuable for me to stay at a single company long enough to live with the consequences of my own engineering decisions. To come face-to-face with my own technical debt.
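To make Marc Brooker's write-skew point concrete, here is a toy model written for this issue, not how any real engine implements snapshot isolation: each transaction reads from a snapshot taken when it begins, and commit checks only for write-write conflicts (first committer wins). The two-doctors-on-call scenario is the classic textbook illustration; all names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class SnapshotStore:
    """Toy multi-version store with snapshot isolation (first committer wins)."""
    data: dict
    version: int = 0
    last_write: dict = field(default_factory=dict)  # key -> version of last committed write

    def begin(self) -> "Txn":
        # The snapshot is a copy of the committed state at transaction start.
        return Txn(self, dict(self.data), self.version)

@dataclass
class Txn:
    store: SnapshotStore
    snapshot: dict
    start_version: int
    writes: dict = field(default_factory=dict)

    def read(self, key):
        return self.writes[key] if key in self.writes else self.snapshot[key]

    def write(self, key, value):
        self.writes[key] = value

    def commit(self) -> None:
        # SI aborts only on write-write conflicts; the read set is never checked.
        for key in self.writes:
            if self.store.last_write.get(key, -1) > self.start_version:
                raise RuntimeError(f"write-write conflict on {key!r}")
        self.store.version += 1
        for key, value in self.writes.items():
            self.store.data[key] = value
            self.store.last_write[key] = self.store.version

def go_off_call(txn: Txn, me: str, other: str) -> None:
    # Invariant the application wants: at least one doctor stays on call.
    if txn.read(other):
        txn.write(me, False)

store = SnapshotStore({"alice": True, "bob": True})
t1, t2 = store.begin(), store.begin()
go_off_call(t1, "alice", "bob")
go_off_call(t2, "bob", "alice")
t1.commit()
t2.commit()  # disjoint write sets, so both commits succeed
print(store.data)  # {'alice': False, 'bob': False}: nobody is on call
```

Each transaction reads the other doctor's row but never writes it, so a write-write check cannot fire. Preventing this means tracking and validating what each transaction read, which is the cost Brooker describes.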

Viktor Leis shares the results of a panel discussion on under-researched database problems:

One significant yet understudied problem raised by multiple panellists is the handling of variable-length strings. … Dealing with strings presents two major challenges. First, query processing is often slow due to the variable size of strings and the (time and space) overhead of dynamic allocation. Second, surprisingly little research has been dedicated to efficient database-specific string compression. Given the importance of strings on real-world query performance and storage consumption, it is surprising how little research there is on the topic (there are some exceptions).

While database researchers often focus on database engine architectures, Andy argued that surrounding topics, such as network connection handling (e.g., database proxies), receive little attention despite their practical importance. Surprisingly, there is also limited research on scheduling database workloads and optimizing the network stack, even though communication bottlenecks frequently constrain efficient OLTP systems.

Mai-Lan Tomsen Bukovec shared the basics of the Principal Engineer Roles Framework: If there is one thing that I have learned, it is that when you run complex systems at scale, you must think deeply about how teams work. It’s not enough to get into the details about what you build. You have to spend lots of time engineering, iterating, and improving how you and your team operate.

Andy Pavlo: DuckDB has entered the zeitgeist as the default choice for someone wanting to run analytical queries on their data. Pandas previously held DuckDB's crowned position. Given DuckDB's insane portability, there are several efforts to stick it inside existing DBMSs that do not have great support for OLAP workloads. This year, we saw the release of four different extensions to stick DuckDB up inside Postgres.

Martin Casado starts a fun thread of often-dangerous tech patterns (definitely ripe for some disagreement, get popcorn and remember he said *almost*):

Systems ideas that sound good but almost never work:
- DSLs
- Live migrating process state
- Anomaly detection
- Control loops responding to system load
- Multi-master writes
- p2p cache sharing
- Hybrid parallelism
- Being clever vs over-provisioning

.. What else?

Nikita Shamgunov: Database land:
* Unified OLTP and OLAP (guilty)
* HTAP (feature not a market). Works as a feature though
* API compat for databases (https://babelfishpg.org is the most recent flop)
* Database migrations (from Oracle, Teradata, etc)
* True geo distribution for a database (we will see about d-sql)
* Shared nothing db architecture (sorry, I know you are an investor)

P.S. Multi-master may actually work (https://dl.acm.org/doi/10.1145/3626246.3653377…). I'm looking into it.

Sunny Bains kicks off some discussion of SQL and scaling: When someone tells you that SQL doesn’t scale, they are either selling you a KV store, they read it on the Internet somewhere or are totally clueless and want to sound smart. I’ve never understood what SQL has to do with scaling.

Ovais Tariq: Almost all high-end services built on SQL databases such as Facebook, Uber, etc restrict the SQL language exposed to the users to the most basic set. Transactional databases scale if you reduce the surface area of features of the SQL language is what I am getting at.

Sam Lambert: it’s also why scaling while doing things like postgres extensions is a pipe dream. too much entropy.

Derrick Wippler: THIS! Also, so many fail to realize that for each JOIN you add there is a 10x or more performance penalty. You can't defy the laws of physics. You CAN use SQL to scale, you just can't abuse it as if these features don't have a cost.

James Cowling: I often see code that assumes database auto-increment ids are monotonically increasing. e.g., AUTO_INCREMENT in MySQL, SERIAL in Postgres. They are not. They may have gaps plus don't reflect commit order, so may show up out of order. It's easy to write bugs that walk over a table assuming this is an actual ordering.

Sunny Bains: The problem around auto-increment is due to historic reasons. Up to SQL-92 there was no mention of sequences/auto-increment. Every db vendor had their own syntax and implementation quirks. SQL 2003 was the first to introduce sequences officially and monotonicity was not actually specified (AFAIR, I remember discussing this when I rewrote InnoDB’s auto-increment handling). SQL 2008 addressed the monotonicity part but AFAIK doesn’t mandate it either, allows for non-monotonicity, values are allowed to be generated out of order when used concurrently. Relying on any specific behaviour is not based on any standard, it’s vendor specific only.

Piyush Goel: This happens especially when you perform batched inserts in a concurrent manner. The engine creates blocks of ids that are spaced out to avoid conflicts. I made a terrible blunder in estimating the size of a critical table by looking at the auto-inc id. I only realized much later that it didn’t tally with the count(*) value and went on a deep-dive to understand the auto-inc behavior.
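A toy model of James Cowling's and Piyush Goel's points, written for this issue rather than taken from any engine: ids are handed out when a row is inserted, but rows only become visible when their transaction commits. Real engines differ in the details (lock modes, sequence caching), so treat this purely as an illustration of the ordering problem; `Table` and `poll_after` are hypothetical names.

```python
import itertools

class Table:
    """Toy table: ids are assigned at INSERT time, rows become visible at COMMIT."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.committed = {}  # id -> row, as seen by readers

    def insert(self, row):
        # The id is consumed immediately, whether or not the transaction commits.
        return next(self._ids), row

    def commit(self, pending):
        row_id, row = pending
        self.committed[row_id] = row

def poll_after(table, last_seen):
    """Naive consumer: SELECT id FROM t WHERE id > :last_seen ORDER BY id."""
    return sorted(i for i in table.committed if i > last_seen)

table = Table()
slow = table.insert("order A")         # gets id 1, but its transaction is slow
table.commit(table.insert("order B"))  # gets id 2 and commits first

seen = poll_after(table, 0)            # [2]
last_seen = max(seen)                  # consumer advances its cursor to 2
table.commit(slow)                     # id 1 becomes visible only now
missed = poll_after(table, last_seen)  # []: row 1 is never delivered

table.insert("order C")                # id 3; this transaction rolls back
table.commit(table.insert("order D"))  # id 4
print(max(table.committed), len(table.committed))  # 4 3
```

The cursor skips id 1 forever, and the rolled-back insert leaves a gap, so the highest id overstates the row count exactly as Piyush describes.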

Sunny Bains: When writing multi-threaded programs remember to test it on HW with multiple sockets. Performance drop due to shared state across sockets is quite depressing to watch. Modern CPUs have made it even more interesting with all kinds of Ln cache sharing combinations. I’ve seen many examples of “it scaled really well on my Mac/PC/laptop”.

Shaun Thomas: The NUMA effect is real. It's one reason I test with and without CPU pinning in virtual environments, because I want to see how bad the degradation is if the load migrates to a cold socket.

Yingjun Wu: While many database vendors are competing in the analytics space (trying to be the next Snowflake or Databricks!), not many are going after Redis. The truth is, a lot of companies complain that Redis is too expensive. I predict that, in 2025, some players will emerge to challenge Redis with an ‘S3 as the primary storage’ architecture.

Yingjun Wu: An interesting observation I've made in the database area is that the current trend in #AgenticAI seems to benefit row stores more than columnar stores. Over the past few years, the focus has been on OLAP, with all database vendors racing to build or enhance their columnar stores. However, what today’s agentic AI actually needs is a search index for a knowledge cache - sth that can be efficiently maintained in a key-value store, a search engine, or even a system like PostgreSQL. What goes around comes around... and then around…

Marc Brooker: To understand the value of backoff (e.g. exponential backoff), it's worth understanding the distinction between 'open' and 'closed' systems. From the classic paper "Open versus Closed: A Cautionary tale" https://www.usenix.org/conference/nsdi-06/open-versus-closed-cautionary-tale
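For reference, a minimal sketch of capped exponential backoff with "full jitter", where each delay is drawn uniformly between zero and the capped exponential bound. The function name and default values are illustrative assumptions, not any particular client library's API.

```python
import random
import time

def call_with_retries(op, max_retries=5, base=0.1, cap=10.0,
                      sleep=time.sleep, rng=random):
    """Call op(), retrying on failure with capped exponential backoff and full jitter."""
    for attempt in range(max_retries + 1):
        try:
            return op()
        except Exception:  # a real client should retry only errors known to be transient
            if attempt == max_retries:
                raise
            # Full jitter: sleep a uniform random time in [0, min(cap, base * 2**attempt)].
            sleep(rng.uniform(0, min(cap, base * 2 ** attempt)))

# Usage: an operation that fails twice, then succeeds.
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("transient")
    return "ok"

sleeps = []
result = call_with_retries(flaky, sleep=sleeps.append, rng=random.Random(42))
print(result, len(sleeps))  # ok 2
```

Roughly, this is where the open/closed distinction bites: in a closed system (a fixed set of clients, each waiting for its previous request before sending the next) the sleep directly lowers offered load, while in an open system new requests keep arriving regardless, so client-side backoff mostly adds latency.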

Marc Brooker: For immutable or versioned data? Erasure coding. Constant work, tunable cost/latency trade-off, operational benefits, and (relatively) simple client-side implementation. Treat slow responses as erasures.
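Storage systems usually use Reed-Solomon codes; the sketch below uses the simplest possible erasure code instead, a single XOR parity shard (k data shards plus one parity shard), which tolerates exactly one missing shard. The client requests all k+1 shards and decodes from whichever k arrive first, so one slow server is simply treated as an erasure. Function names and latencies are made up for illustration.

```python
from functools import reduce

def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))

def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal-size shards (zero-padded) plus one XOR parity shard."""
    size = -(-len(data) // k)  # ceiling division
    padded = data.ljust(size * k, b"\0")
    shards = [padded[i * size:(i + 1) * size] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]

def decode(received: dict[int, bytes], k: int, length: int) -> bytes:
    """Rebuild the data from any k of the k+1 shards; a missing shard is an erasure."""
    missing = [i for i in range(k + 1) if i not in received]
    if len(missing) > 1:
        raise ValueError("single XOR parity tolerates only one erasure")
    if missing and missing[0] < k:
        # XOR of the k shards received (parity included) equals the missing data shard.
        received = {**received, missing[0]: reduce(xor_bytes, received.values())}
    return b"".join(received[i] for i in range(k))[:length]

# Usage: request all 5 shards, but decode as soon as the fastest 4 have arrived.
data = b"immutable object payload"
shards = encode(data, k=4)
latency_ms = [12, 480, 9, 15, 11]  # made-up latencies: shard 1's server is slow
fastest = sorted(range(len(shards)), key=lambda i: latency_ms[i])[:4]
restored = decode({i: shards[i] for i in fastest}, k=4, length=len(data))
print(restored == data)  # True
```

The price is (k+1)/k storage plus one extra request per read; that is the tunable cost/latency trade-off Brooker mentions.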

Jeremy Morell: The "use tail sampling for your traces" advice should probably also come with a strong "you must be this tall to ride this ride" caveat: Simple if you have a monolith, challenging if you have a cluster of microservices, jesus take the wheel if you have geo-distributed traces crossing regions.

Ivan Burmistrov: IMO sampling is one of the most undercovered topics in the whole o11y area. There are either claims "sampling is not needed" (confusing, untrue), or this one you mentioned. There are no proper guidances, examples of how to do it properly.
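To see why tail sampling gets harder as systems spread out, here is a minimal in-process sketch. The class and field names are hypothetical, not any tracing library's API: every span is buffered until the trace's root span ends, and only then is the trace kept (it contains an error or a slow span) or dropped (everything else, apart from a small random fraction).

```python
import random
from collections import defaultdict
from dataclasses import dataclass

@dataclass
class Span:
    trace_id: str
    name: str
    duration_ms: float
    error: bool = False
    is_root: bool = False

class TailSampler:
    """Buffers every span of a trace; decides keep/drop once the root span ends."""

    def __init__(self, slow_ms: float = 500.0, keep_rate: float = 0.05, rng=None):
        self.slow_ms = slow_ms
        self.keep_rate = keep_rate  # baseline fraction of "boring" traces to keep
        self.rng = rng if rng is not None else random.Random()
        self.buffer = defaultdict(list)  # trace_id -> spans received so far
        self.kept = []

    def on_span(self, span: Span) -> None:
        self.buffer[span.trace_id].append(span)
        if span.is_root:  # assumption: the root span is the last one to arrive
            self._decide(span.trace_id)

    def _decide(self, trace_id: str) -> None:
        spans = self.buffer.pop(trace_id)
        interesting = any(s.error or s.duration_ms >= self.slow_ms for s in spans)
        if interesting or self.rng.random() < self.keep_rate:
            self.kept.append(spans)

sampler = TailSampler(keep_rate=0.0)  # keep only interesting traces in this demo
sampler.on_span(Span("t1", "db.query", 30))
sampler.on_span(Span("t1", "GET /cart", 45, is_root=True))
sampler.on_span(Span("t2", "payment.charge", 20, error=True))
sampler.on_span(Span("t2", "POST /checkout", 60, is_root=True))
print([spans[0].trace_id for spans in sampler.kept])  # ['t2']
```

The sketch quietly assumes that all spans of a trace reach the same sampler and that the root span finishes last. With many services you must route spans by trace ID to one collector; across regions you also have to wait for late spans, so real collectors typically fall back to a fixed decision window.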

Ryanne Dolan: Meta and Salesforce both just announced they have halted hiring of SWEs cuz AI has replaced them. And you STILL think your job is safe??

Gergely Orosz: Anyone saying that GenAI could replace software engineers doesn't understand how software is created (and operated). Tool innovations have always made the process of building software faster and cheaper: GenAI and AI agents will also do this. These are tools and efficiency gains.

Kelsey Hightower: DeepSeek, an LLM trained for a fraction of the cost of GPT-Xx models, in 2 months for 6 million, on limited GPUs due to export restrictions, and competing head to head. This is crazy. If these numbers hold up, consider the game changed.

Jaana Dogan: Product flywheels are so much easier to create if you have decent infrastructure that allows you to recompose and create product experiences quickly. It's a lesson hard to learn if you never had a chance to work for a company with the right building blocks.

Gwen Shapira: Everyone: LLMs are so intelligent that they'll take our jobs! 2025 is the year of agents and robots! Roomba: inhales 2 usb cables and a cat toy. Proceeds to get stuck humping my Poang chair.

Ethan Mollick muses on the recent advancements in AI models:

As one fun example, I read an article about a recent social media panic - an academic paper suggested that black plastic utensils could poison you because they were partially made with recycled e-waste. A compound called BDE-209 could leach from these utensils at such a high rate, the paper suggested, that it would approach the safe levels of dosage established by the EPA. A lot of people threw away their spatulas, but McGill University’s Joe Schwarcz thought this didn’t make sense and identified a math error where the authors incorrectly multiplied the dosage of BDE-209 by a factor of 10 on the seventh page of the article - an error missed by the paper’s authors and peer reviewers. I was curious if o1 could spot this error. So, from my phone, I pasted in the text of the PDF and typed: “carefully check the math in this paper.” That was it. o1 spotted the error immediately (other AI models did not).

In fact, even the earlier version of o1, the preview model, seems to represent a leap in scientific ability. A bombshell of a medical working paper from Harvard, Stanford, and other researchers concluded that “o1-preview demonstrates superhuman performance [emphasis mine] in differential diagnosis, diagnostic clinical reasoning, and management reasoning, superior in multiple domains compared to prior model generations and human physicians."

Potentially more significantly, I have increasingly been told by researchers that o1, and especially o1-pro, is generating novel ideas and solving unexpected problems in their field (here is one case).

…the lesson to take away from this is that, for better and for worse, we are far from seeing the end of AI advancement.

Gwen Shapira: I believe that this time next year, we’ll look at AI validation and observability the same way we look at unit tests today - an essential tool for continuous improvement process.

Charity Majors and Phillip Carter: One disappointing aspect of the current boom is how many companies are being incredibly closed-lipped about the practical aspects of developing with LLMs. Most leading AI companies seem reluctant to show their work or talk about how they resolve the contradictions of applying software engineering best practices to nondeterministic systems, or how AI is changing the way they develop software and collaborate with each other. They act like this is part of their secret sauce, or their competitive advantage.

Melanie Mitchell muses on whether o3 has solved abstract reasoning or not:

The purpose of abstraction is to be able to quickly and flexibly recognize new situations using known concepts, and to act accordingly. That is, the purpose of abstraction—at least a major purpose—is to generalize.

The o1 and o3 systems are a bit different. They use a pre-trained LLM, but at inference time, when given a new problem, they do a lot of additional computation, namely, generating their chain-of-thought traces.

One set of researchers showed, however, that changing the game just by moving the paddle up a few pixels resulted in the original trained system performing dramatically worse. It seems that the system had learned to play Breakout not by learning the concepts of “paddle”, “ball”, or “brick”, but by learning very specific mappings of pixel configurations into actions. That is, it didn’t learn the kinds of abstractions that would allow it to generalize.

I have similar questions about the abstractions discovered by o3 and the other winning ARC programs.

Interesting topic: AI agents and the recent advancements in AI models

Since issue #5, two interesting blog posts about AI agents have been published, and many predict that 2025 will be the year of the AI agent.

Anthropic wrote Building Effective Agents.

Chip Huyen wrote Agents.

Ethan Mollick has also recently published a number of excellent blog posts:

In this section of issue #6, we’ll explore what AI agents are as well as some of the challenges involved.

At the most abstract level, Chip Huyen defines an agent in her Agents blog post:

An agent is anything that can perceive its environment and act upon that environment. Artificial Intelligence: A Modern Approach (1995) defines an agent as anything that can be viewed as perceiving its environment through sensors and acting upon that environment through actuators. This means that an agent is characterized by the environment it operates in and the set of actions it can perform.

Taking actions is what distinguishes an AI agent from an LLM that only returns textual or graphical responses to prompts. Ethan Mollick notes that much of modern work is digital, and therefore something an AI could plausibly do.

The digital world in which most knowledge work is done involves using a computer—navigating websites, filling forms, and completing transactions. Modern AI systems can now perform these same tasks, effectively automating what was previously human-only work. This capability extends beyond simple automation to include qualitative assessment and problem identification.

Anthropic discuss the definition of an agent in their Building Effective Agents blog post:

"Agent" can be defined in several ways. Some customers define agents as fully autonomous systems that operate independently over extended periods, using various tools to accomplish complex tasks. Others use the term to describe more prescriptive implementations that follow predefined workflows. At Anthropic, we categorize all these variations as agentic systems, but draw an important architectural distinction between workflows and agents:

Workflows are systems where LLMs and tools are orchestrated through predefined code paths.

Agents, on the other hand, are systems where LLMs dynamically direct their own processes and tool usage, maintaining control over how they accomplish tasks.

This seems like an important distinction. A workflow is a kind of static flow chart of branches and actions that constrains what the AI can do. It’s prescriptive: more predictable but less flexible. A true AI agent, on the other hand, determines its own control flow, which gives it the freedom to plan and execute flexibly but comes with additional risk. Anthropic note that you should choose the simplest option possible, and reach for a workflow or an agent only when more complexity is needed:

…workflows offer predictability and consistency for well-defined tasks, whereas agents are the better option when flexibility and model-driven decision-making are needed at scale.

…agents can be used for open-ended problems where it’s difficult or impossible to predict the required number of steps, and where you can’t hardcode a fixed path.
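The workflow/agent distinction can be sketched in a few lines of Python. The tools and the `scripted_model` policy are hypothetical stand-ins (a real agent would ask an LLM to choose the next action); the point is only where the control flow lives.

```python
from typing import Callable

# Hypothetical tools; real ones would call APIs or databases.
def search_orders(customer: str) -> str:
    return f"orders for {customer}: #1001 (delayed)"

def draft_reply(context: str) -> str:
    return f"Reply based on: {context}"

TOOLS: dict[str, Callable[[str], str]] = {
    "search_orders": search_orders,
    "draft_reply": draft_reply,
}

def workflow(customer: str) -> str:
    """Workflow: the code path is fixed in advance by the developer.
    LLM calls (not shown) would only fill in individual steps."""
    orders = search_orders(customer)
    return draft_reply(orders)

def agent(goal: str, choose_action, max_steps: int = 5) -> str:
    """Agent: at each step the model picks the next tool, or 'finish',
    so the control flow is decided at run time. max_steps bounds runaway loops."""
    history = [goal]
    for _ in range(max_steps):
        tool, arg = choose_action(history)
        if tool == "finish":
            return arg
        history.append(TOOLS[tool](arg))
    raise RuntimeError("step budget exhausted before the agent finished")

def scripted_model(history: list[str]) -> tuple[str, str]:
    """Stand-in for an LLM: a hard-coded policy, for illustration only."""
    if len(history) == 1:
        return "search_orders", "ada"
    if len(history) == 2:
        return "draft_reply", history[-1]
    return "finish", history[-1]

print(agent("help ada with her order", scripted_model) == workflow("ada"))  # True
```

Here the agent happens to take the same path as the workflow; the difference is that nothing in `agent` guarantees it will, which is both the flexibility and the risk.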

In a practical sense, an AI agent is an LLM designed to satisfy specific goals, using a suite of tools that let it interact with the real world in order to achieve them. Chip Huyen classifies the tools into three categories:

Depending on the agent’s environment, there are many possible tools. Here are three categories of tools that you might want to consider: knowledge augmentation (i.e., context construction), capability extension, and tools that let your agent act upon its environment.

In order to satisfy a goal, an agent must use a combination of:

Effective planning and reasoning.

The agent makes a plan of steps it needs to perform in order to satisfy the goal.

Accurate tool selection and execution.

The LLM may need to make API calls to retrieve information and/or to make changes or take actions in the real world.

Self-reflection and evaluation.

At every step, the agent should reflect on what it has planned and the results it has received to ensure it is still doing the right thing.

AI agents are stochastic systems, which adds a new flavor of risk to every step. Many agent systems require multiple steps to satisfy a goal, and errors in each step can compound. This is one of the defining characteristics of AI agents that the agent designer must account for. In fact, accounting for all the failure modes of an agent is where the steepest learning curve lies, and where most of the development cost goes.

As a distributed systems engineer, I’m probably more on the paranoid side of the risk-awareness spectrum. Through that lens I see all manner of challenges to overcome when building AI agents:

Effective Planning:

AI agents must create plans that align with their goals while adapting to dynamic environments and incomplete information. Self-reflection and being able to change course may be necessary.

Plans may need to be evaluated to ensure that they are feasible, efficient, and contextually appropriate. In many designs, the agent is itself a multi-agent system in which planning, execution and evaluation are carried out by separate, collaborating agents.

There are a number of things that can go wrong in the planning phase:

The agent does not revise plans when new information contradicts initial assumptions.

The agent gets stuck in loops, revisiting the same steps repeatedly without making progress.

The agent sets inappropriate or harmful goals due to poorly defined prompts or objectives.
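The looping failure mode is one of the few in this list that a runtime can catch mechanically. A minimal guard, assuming each tool call is represented as a name plus a dictionary of hashable arguments (an assumption, not a specific framework's API), might cap both total steps and repeated identical calls:

```python
from collections import Counter

class LoopGuard:
    """Stops an agent that exceeds a step budget or keeps repeating the same call."""

    def __init__(self, max_steps: int = 20, max_repeats: int = 2):
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.calls = Counter()  # (tool, frozen args) -> times seen

    def check(self, tool: str, args: dict) -> None:
        """Call before executing each tool call; raises to force a stop or re-plan."""
        self.steps += 1
        if self.steps > self.max_steps:
            raise RuntimeError(f"step budget of {self.max_steps} exceeded")
        key = (tool, tuple(sorted(args.items())))  # assumes hashable argument values
        self.calls[key] += 1
        if self.calls[key] > self.max_repeats:
            raise RuntimeError(f"{tool}({args}) repeated {self.calls[key]} times; re-plan")

guard = LoopGuard(max_repeats=2)
guard.check("search", {"q": "refund policy"})
guard.check("search", {"q": "refund policy"})
try:
    guard.check("search", {"q": "refund policy"})  # third identical call
except RuntimeError as err:
    print(err)  # search({'q': 'refund policy'}) repeated 3 times; re-plan
```

Exact-match detection is crude: an agent that rephrases the same search slips past it, and it does nothing about stale plans or badly specified goals.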

Accurate Tool Selection and Usage:

Agents need to identify the correct tools (e.g., APIs, models) for a task and invoke them properly.

Common issues include:

Invoking the wrong tool, or failing to consider multiple tools or approaches, leading to suboptimal performance.

Providing incorrect or incomplete inputs, which can produce wrong or suboptimal results.

Hallucinating non-existent tools.

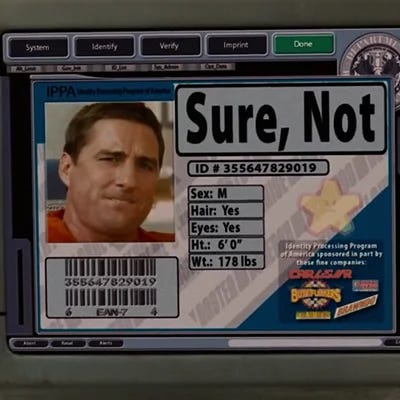

Failing to recognize when tool outputs indicate anomalies, errors, or limitations (as in the humorous Idiocracy clip at the top of the issue).
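Several of these issues can be caught by the runtime rather than left to the model. A minimal sketch with a hypothetical `get_weather` tool and a hand-rolled registry (real systems often describe tool parameters with JSON Schema instead): unknown tools and malformed arguments are rejected before anything executes, and error results are surfaced explicitly rather than passed back as ordinary data.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ToolSpec:
    func: Callable[..., dict]
    params: dict  # parameter name -> expected Python type

def get_weather(city: str) -> dict:
    # Hypothetical tool; a real one would call a weather API.
    if not city:
        return {"status": "error", "reason": "empty city"}
    return {"status": "ok", "temp_c": 21}

REGISTRY = {"get_weather": ToolSpec(get_weather, {"city": str})}

def run_tool_call(name: str, args: dict) -> dict:
    spec = REGISTRY.get(name)
    if spec is None:
        # A hallucinated tool is rejected before anything runs.
        return {"status": "rejected", "reason": f"unknown tool {name!r}"}
    missing = sorted(spec.params.keys() - args.keys())
    extra = sorted(args.keys() - spec.params.keys())
    wrong = [k for k, t in spec.params.items() if k in args and not isinstance(args[k], t)]
    if missing or extra or wrong:
        return {"status": "rejected",
                "reason": f"missing={missing} extra={extra} wrong_type={wrong}"}
    result = spec.func(**args)
    if result.get("status") != "ok":
        # Make tool failures explicit so the agent cannot mistake them for data.
        return {"status": "tool_error", "reason": result.get("reason", "unknown")}
    return result

print(run_tool_call("get_wether", {"city": "Oslo"}))
# {'status': 'rejected', 'reason': "unknown tool 'get_wether'"}
```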

Reasoning and Decision-Making:

Agents may struggle to interpret the results of their actions or external tool outputs.

Errors in reasoning can lead to invalid conclusions, impacting subsequent actions (the compounding of errors). Some reasoning errors may result from forgetting critical context or information needed to make accurate decisions.

Failure Modes in Execution:

Agents can fail to execute actions correctly, leading to unintended consequences. The first challenge is detecting when actions are executed incorrectly and the second challenge is remediating such actions.

Difficulty handling edge cases or unexpected outcomes, or treating rare cases as general ones.

Monitoring and auditing agents may also be challenging. Not only detecting when things go wrong, but also detecting bias and justifying why certain actions were taken.
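Detection and remediation can be made explicit for actions that have a compensating action. A minimal sketch, where `verify` and `undo` are caller-supplied functions (hypothetical names) and the audit log is an in-memory list standing in for durable, append-only storage:

```python
import time

AUDIT_LOG: list[dict] = []  # stand-in for durable, append-only storage

def execute_with_verification(action, verify, undo, rationale: str) -> str:
    """Run an agent action, check its postcondition, and compensate if the check fails.
    Each call is logged with the agent's stated rationale for later review.
    (Exceptions raised by the action itself are not handled in this sketch.)"""
    entry = {"ts": time.time(), "action": action.__name__, "rationale": rationale}
    result = action()
    entry["result"] = result
    if verify(result):
        entry["outcome"] = "verified"
    else:
        undo(result)  # only possible when a compensating action exists
        entry["outcome"] = "compensated"
    AUDIT_LOG.append(entry)
    return entry["outcome"]

# Usage with a hypothetical inventory reservation that must never go negative.
inventory = {"widgets": 10}

def reserve_five():
    inventory["widgets"] -= 5
    return inventory["widgets"]

outcomes = [
    execute_with_verification(
        reserve_five,
        verify=lambda remaining: remaining >= 0,
        undo=lambda _: inventory.update(widgets=inventory["widgets"] + 5),
        rationale="customer asked for five widgets",
    )
    for _ in range(3)
]
print(outcomes, inventory)  # ['verified', 'verified', 'compensated'] {'widgets': 0}
```

This only helps when an undo exists and the postcondition can be checked, which is one reason irreversible actions are the riskiest ones to hand to an agent.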

I could go on, but you get the idea. I imagine that a lot of experimentation and iteration will go into AI agent development, and getting that last 20% of completeness and polish could be really time-consuming. Chip Huyen covers a lot of this in her framing of AI agent development. The Anthropic post also steers you toward choosing the simplest agent, or no agent at all:

When building applications with LLMs, we recommend finding the simplest solution possible, and only increasing complexity when needed. This might mean not building agentic systems at all.

…

Start with simple prompts, optimize them with comprehensive evaluation, and add multi-step agentic systems only when simpler solutions fall short. When implementing agents, we try to follow three core principles:

Maintain simplicity in your agent's design.

Prioritize transparency by explicitly showing the agent’s planning steps.

Carefully craft your agent-computer interface (ACI) through thorough tool documentation and testing.

AI agents and agentic systems are an emerging practice and I have to agree that 2025 will indeed be the year of the AI agent given the promise that AI agents hold and the rapid improvements in model capabilities.

That said, I do have some serious concerns, and I believe there are two constraining aspects of AI agents that present a challenge to widespread adoption:

Reliability. There are so many failure modes, and even the mitigations are usually run by LLMs and therefore have their own failure modes. Errors compound, and detection and mitigation may themselves be unreliable.

Cost. Agents may require multiple reasoning steps using the more powerful models, which pushes up cost, and higher costs raise the bar for the value proposition. Of course, with the arrival of DeepSeek-V3, maybe 2025 will also be the year of the more efficient LLM.

Chip Huyen noted in her post:

Compared to non-agent use cases, agents typically require more powerful models for two reasons:

Compound mistakes: an agent often needs to perform multiple steps to accomplish a task, and the overall accuracy decreases as the number of steps increases. If the model’s accuracy is 95% per step, over 10 steps, the accuracy will drop to 60%, and over 100 steps, the accuracy will be only 0.6%.

Higher stakes: with access to tools, agents are capable of performing more impactful tasks, but any failure could have more severe consequences.
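Chip Huyen's numbers follow from multiplying per-step success probabilities, under the simplifying assumption that each step succeeds or fails independently. Inverting the formula shows how demanding long chains are:

```python
def end_to_end_success(per_step: float, steps: int) -> float:
    """Probability that all steps succeed, assuming independent per-step success."""
    return per_step ** steps

def required_per_step(target: float, steps: int) -> float:
    """Per-step success rate needed to reach a target end-to-end success rate."""
    return target ** (1 / steps)

for n in (1, 10, 100):
    print(f"{n:>3} steps: {end_to_end_success(0.95, n):.1%}")
#   1 steps: 95.0%
#  10 steps: 59.9%
# 100 steps: 0.6%
print(f"{required_per_step(0.90, 10):.1%}")  # 99.0%
```

Keeping a 10-step task at 90% end-to-end already requires roughly 99% accuracy per step.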

As AI becomes more capable, but with non-trivial associated costs, we may enter an age where cost efficiency is the primary factor in deciding whether to use AI, use a human, or not do the task at all. If both AI and human workers can execute a digital task at similar levels of competence, then cost efficiency becomes the defining question. François Chollet made this point over the holiday period:

One very important thing to understand about the future: the economics of AI are about to change completely. We'll soon be in a world where you can turn test-time compute into competence -- for the first time in the history of software, marginal cost will become critical. Cost-efficiency will be the overarching measure guiding deployment decisions. How much are you willing to pay to solve X?
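One way to make "how much are you willing to pay" concrete: if every attempt is checked and failed attempts are retried independently, the number of attempts is geometric, so the expected cost per success is (cost per attempt + cost of checking it) / success rate. The prices and success rates below are invented purely for illustration.

```python
def cost_per_success(attempt_cost: float, success_rate: float,
                     verify_cost: float = 0.0) -> float:
    """Expected cost of one successful outcome when every attempt is checked and
    failures are retried independently (number of attempts ~ Geometric(success_rate))."""
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return (attempt_cost + verify_cost) / success_rate

# Hypothetical numbers: cheap AI attempts that each need a short human review,
# versus a human doing the task directly with no separate review.
ai = cost_per_success(attempt_cost=0.50, success_rate=0.60, verify_cost=2.00)
human = cost_per_success(attempt_cost=20.00, success_rate=0.95)
print(f"AI: ${ai:.2f}  human: ${human:.2f}")  # AI: $4.17  human: $21.05
```

With these made-up numbers, checking the work dominates the AI side of the ledger, which is the reliability concern expressed as a cost.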

In this early phase, agents are likely best suited to narrow tasks that do not involve important actions such as bank transfers, costly purchases, or other actions that cannot be undone without cost or negative impact. Ethan Mollick noted that:

Narrow agents are now a real product, rather than a future possibility. There are already many coding agents, and you can use experimental open-source agents that do scientific and financial research.

Narrow agents are specialized for a particular task, which means they are somewhat limited. That raises the question of whether we soon see generalist agents where you can just ask the AI anything and it will use a computer and the internet to do it. Simon Willison thinks not despite what Sam Altman has argued. We will learn more as the year progresses, but if general agentic systems work reliably and safely, that really will change things, as it allows smart AIs to take action in the world.

It will be fascinating to watch how agentic systems evolve as a category. Adoption of agents may ebb and flow, but I expect a general trend towards greater adoption as:

Capabilities, in-production reliability and costs become known.

Patterns and practices evolve.

And of course, the models themselves progress, in terms of both capabilities and cost efficiency.

I’ll finish with one more quote from Ethan Mollick:

Organizations need to move beyond viewing AI deployment as purely a technical challenge. Instead, they must consider the human impact of these technologies. Long before AIs achieve human-level performance, their impact on work and society will be profound and far-reaching.